I am known as a strong ZFS Boot Environment supporter … and not without a reason. I have stated the reasons ‘why’ many times but most (or all) of them are condensed here – https://is.gd/BECTL – in my presentation about it.

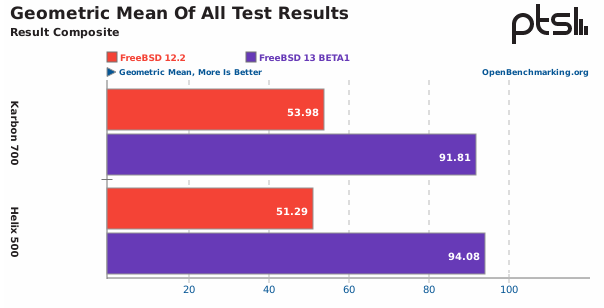

The upcoming FreeBSD 13.0-RELEASE looks very promising. In many tests it is almost TWICE as fast as the 12.2-RELEASE. Ouch!

The detailed tests are available on the phoronix.com site.

Having 12.2-RELEASE installed I wanted to try 13.0-BETA* to check whether the things that are important to me – like working suspend/resume for example – work as advertised on the newer version. It is a perfect task for ZFS Boot Environments.

In the example below we will create an entirely new ZFS Boot Environment as a clone of our current 12.2-RELEASE system and upgrade it there (in the BE) to the 13.0-BETA3 version … and only one reboot will be required – not three as in the typical freebsd-update(8) upgrade procedure.

I assume that you have FreeBSD 12.2-RELEASE installed on ZFS (the default FreeBSD ZFS install) and that it is installed in UEFI or UEFI+BIOS mode.

Here are the steps that will be needed.

(host) # beadm create 13  # create new '13' ZFS Boot Environment

Created successfully

(host) # beadm mount 13 /var/tmp/BE-13  # mount new '13' BE somewhere

Mounted successfully on '/var/tmp/BE-13'

(host) # chroot /var/tmp/BE-13  # make chroot(8) into that place

(BE) # mount -t devfs devfs /dev  # mount the devfs(8) in that BE

(BE) # rm -rf /var/db/freebsd-update  # remove any old patches

(BE) # mkdir /var/db/freebsd-update  # create fresh dir for patches

(BE) # freebsd-update upgrade -r 13.0-BETA3  # fetch the patches needed for upgrade

(BE) # freebsd-update install  # install kernel and kernel modules

(BE) # freebsd-update install  # install userspace/binaries/libraries

(BE) # pkg upgrade  # upgrade all packages with pkg(8)

(BE) # freebsd-update install  # remove old libraries and files

(BE) # exit  # leave chroot(8) environment

(host) # umount /var/tmp/BE-13/dev  # umount the devfs(8) in that BE

(host) # beadm activate 13  # activate new '13' BE

Activated successfully

I am using my own sysutils/beadm for the process but you may just as well use bectl(8) from the FreeBSD base system.
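The steps above can also be collected into one small sh(1) function. This is only a sketch: the names upgrade_in_be, BE_TOOL and RUN are made up for this example and are not part of beadm(8) or bectl(8). It assumes that the create, mount and activate subcommands take the same arguments in both tools, and that running each command through chroot(8) from the host is equivalent to typing it inside the chroot as shown above.

```sh
#!/bin/sh
# Sketch only: upgrade_in_be, BE_TOOL and RUN are hypothetical names.
upgrade_in_be() {
    be="$1"                    # name of the new Boot Environment, e.g. 13
    release="$2"               # target release, e.g. 13.0-BETA3
    mnt="/var/tmp/BE-${be}"    # same mount point as in the steps above
    tool="${BE_TOOL:-beadm}"   # beadm (default) or bectl
    run="${RUN:-}"             # set RUN=echo to print instead of execute

    $run "$tool" create "$be" &&
    $run "$tool" mount "$be" "$mnt" &&
    $run chroot "$mnt" mount -t devfs devfs /dev &&
    $run chroot "$mnt" rm -rf /var/db/freebsd-update &&
    $run chroot "$mnt" mkdir /var/db/freebsd-update &&
    $run chroot "$mnt" freebsd-update upgrade -r "$release" &&
    $run chroot "$mnt" freebsd-update install &&   # kernel and kernel modules
    $run chroot "$mnt" freebsd-update install &&   # userspace
    $run chroot "$mnt" pkg upgrade &&              # packages
    $run chroot "$mnt" freebsd-update install &&   # remove old libraries
    $run umount "$mnt/dev" &&
    $run "$tool" activate "$be"
}
```

Calling it as RUN=echo upgrade_in_be 13 13.0-BETA3 only prints the twelve commands, which is a cheap way to review them first. Without RUN the commands are executed for real, and freebsd-update(8) will still ask its usual questions.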

We will also need the new FreeBSD loader(8), which will be updated this way – thanks to @JeffSipek for pointing that out.

On my system FreeBSD is installed on the ada1 device.

(host) # gpart show -p ada1 | grep efi  # find UEFI msdosfs(5) partition

40 409600 ada1p1 efi (200M)  # <-- this one

(host) # mount_msdosfs /dev/ada1p1 /mnt  # mount it under /mnt

(host) # find /mnt  # display its contents

/mnt

/mnt/efi

/mnt/efi/boot

/mnt/efi/boot/bootx64.efi  # update bootx64.efi file

(host) # cp /var/tmp/BE-13/boot/loader.efi /mnt/efi/boot/bootx64.efi  # copy the new loader.efi from the still mounted '13' BE

(host) # umount /mnt  # unmount /mnt filesystem
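If you want to pick the efi partition name from a script instead of reading the grep(1) output by eye, a small awk(1) filter will do. This is a sketch under the assumption that gpart show -p prints the columns in the order seen above (start, size, name, type); the function name efi_partition is made up for this example.

```sh
#!/bin/sh
# Sketch only: efi_partition is a hypothetical helper name.
# Reads `gpart show -p <disk>` output on stdin and prints the name
# of the first partition whose type column is exactly "efi".
efi_partition() {
    awk '$4 == "efi" { print $3; exit }'
}
```

Usage would be something like gpart show -p ada1 | efi_partition, which on my system should print ada1p1.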

There is a small chance that you will not be able to mount the efi partition, and even fsck(8) is not able to help here.

Typical errors that some users have faced look like this:

(host) # mount_msdosfs /dev/ada1p1 /mnt  # error when trying to mount efi partition

mount_msdosfs: /dev/ada1p1: Invalid argument

(host) # fsck_msdosfs -y /dev/ada1p1  # error when trying to fsck(8) that partition

** /dev/ada1p1

Invalid signature in boot block: 0b6a

If you hit that problem then first back up your current efi partition, for example to the /BACKUP.ada1p1 file.

(host) # dd < /dev/ada1p1 > /BACKUP.ada1p1 bs=1m
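Before wiping the partition it does not hurt to confirm that the backup is byte for byte identical to the source. Below is a minimal sketch of such a check; the function name backup_partition is made up for this example, and the block size is written in plain bytes only so that the same line works with dd(1) implementations that do not accept the 1m suffix.

```sh
#!/bin/sh
# Sketch only: backup_partition is a hypothetical helper name.
# Copies $1 to $2 with dd(1) and then compares both with cmp(1).
# Returns 0 only when the copy succeeded and the contents match.
backup_partition() {
    src="$1"
    dst="$2"
    dd if="$src" of="$dst" bs=1048576 2>/dev/null || return 1
    cmp -s "$src" "$dst"
}
```

For the case above that would be backup_partition /dev/ada1p1 /BACKUP.ada1p1 && echo OK.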

Now we will create a fresh efi partition from scratch.

(host) # newfs_msdos -F 32 -c 1 /dev/ada1p1  # create new FAT32 partition

(host) # mount_msdosfs /dev/ada1p1 /mnt  # mount it under /mnt

(host) # mkdir -p /mnt/efi/boot  # create needed directories

(host) # cp /var/tmp/BE-13/boot/loader.efi /mnt/efi/boot/bootx64.efi  # copy the new loader.efi from the still mounted '13' BE

(host) # umount /mnt  # unmount /mnt filesystem

Now you should have a new ‘working’ efi partition.

The last step is to reboot(8) into the new 13.0-BETA3 system.

(host) # reboot

If you find any problems with the new bootloader not being able to load your new FreeBSD then you may alternatively copy the boot1.efi file instead of loader.efi to /mnt/efi/boot/bootx64.efi.

Keep in mind that if you boot from a geli(8) encrypted system then loader.efi is mandatory and you will not be able to boot if you use the boot1.efi file instead.

Done.

You should now see the new FreeBSD loader(8) in all its glory 🙂

You may now enjoy the latest FreeBSD 13.0-BETA3 installation.

The same steps will be required to update to the soon to be available FreeBSD 13.0-RC* (RC1/RC2/RC3) versions and finally to FreeBSD 13.0-RELEASE, hopefully sometime in March 2021.

UPDATE 1 – What if Everything Went Fine

You now have the most up to date FreeBSD system, which should work faster than 12.2-RELEASE, and you still have your older 12.2-RELEASE Boot Environment that you can go back to if you find any problems with the 13.0 version.

On my system it looks like this:

(host) # beadm list

BE Active Mountpoint Space Created

12.2 - - 6.5G 2021-02-12 10:15

13 NR / 18.8G 2021-02-13 11:32

The Space column is a little misleading as it also takes into account, for example, the space used by snapshots. To see exactly how much space each Boot Environment takes, use the -D option. This way you will get the space information for each Boot Environment separately.

(host) # beadm list -D

BE Active Mountpoint Space Created

12.2 - - 9.8G 2021-02-12 10:15

13 NR / 9.6G 2021-02-13 11:32

I will be keeping the 12.2-RELEASE Boot Environment for a while – maybe I will delete it a month or so after 13.0-RELEASE is available – but if you have tested everything you need and feel that 13.0 fulfills all your needs the same way or better than 12.2-RELEASE, then you may delete that older Boot Environment with the command below.

(host) # beadm destroy 12.2
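If you script the cleanup, it may be worth checking first that the BE you are about to destroy is not the one marked N (active now) or R (active on reboot) in the Active column shown above. The sketch below parses the beadm list output for that; the function name be_is_active is made up for this example and it assumes the column layout shown in this post.

```sh
#!/bin/sh
# Sketch only: be_is_active is a hypothetical helper name.
# Reads `beadm list` output on stdin and succeeds (exit 0) when the
# BE named $1 has N and/or R in the Active column.
# The names are compared as strings, so '13' does not match '13.0'.
be_is_active() {
    awk -v be="$1" '
        ($1 "") == (be "") && $2 ~ /[NR]/ { found = 1 }
        END { exit !found }
    '
}
```

A guarded destroy could then look like beadm list | be_is_active 12.2 || beadm destroy 12.2.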

UPDATE 2 – What if Something Goes Wrong

Generally if the new BE named ‘13‘ does not boot (or hangs at boot) then just select your earlier Boot Environment that you used before the upgrade – the one that has 12.2-RELEASE inside it.

You now have the system that worked for you before we proceeded with the upgrade process.

If that fails (or the bootloader is broken) then grab the FreeBSD-13.0-BETA3-amd64-memstick.img image and write it to a pendrive with the dd(8) command.

# dd if=FreeBSD-13.0-BETA3-amd64-memstick.img of=/dev/da0 bs=1M status=progress

Now that you have the pendrive with FreeBSD 13.0-BETA3 you may boot from it and fix your installation. Pick LiveCD after it has loaded. Then type root at the login: prompt and hit [ENTER] for the empty password.

The list of tasks that can be done now depends on what is broken, and I can not guess every possible error and fix scenario, so if you hit any problems during the upgrade process just contact me in whichever way you prefer and we will figure something out.

UPDATE 3 – Faster Upgrade with New beadm(8) Version

Today (2022/05/06) I introduced a new beadm(8) version 1.3.5 that comes with a new chroot(8) feature. It has already been committed to the FreeBSD Ports tree under PR 263805 so expect packages to be available soon.

You can also update beadm(8) directly like this:

# fetch -o /usr/local/sbin/beadm https://raw.githubusercontent.com/vermaden/beadm

Now for the faster update process – here are the instructions depending on the shell you use.

- ZSH / CSH

# beadm create 13.1-RC6

# beadm chroot 13.1-RC6

BE # zsh || csh

BE # yes | freebsd-update upgrade -r 13.1-RC6

BE # repeat 3 freebsd-update install

BE # exit

# beadm activate 13.1-RC6

# reboot

- SH / BASH / FISH / KSH

# beadm create 13.1-RC6

# beadm chroot 13.1-RC6

BE # sh || bash || fish || ksh

BE # yes | freebsd-update upgrade -r 13.1-RC6

BE # seq 3 | xargs -I- freebsd-update install

BE # exit

# beadm activate 13.1-RC6

# reboot
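For sh(1) compatible shells the seq(1) and xargs(1) pair can also be replaced with a plain loop, which additionally stops at the first failed run. This is just a sketch and the function name repeat_n is made up for this example; it is not a shell builtin like repeat in zsh or csh.

```sh
#!/bin/sh
# Sketch only: repeat_n is a hypothetical helper name.
# Runs the given command $1 times and stops early (returning 1)
# as soon as one of the runs fails.
repeat_n() {
    n="$1"
    shift
    i=0
    while [ "$i" -lt "$n" ]; do
        "$@" || return 1
        i=$((i + 1))
    done
}
```

Inside the BE that would be repeat_n 3 freebsd-update install.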

Happy upgrading 🙂

EOF

Thanks for the post! (despite using boot environments). This part looks scary to be honest.

What are the risks of making a mistake when installing the new bootloader? Can the system become unbootable because of this?

Thanks 🙂

Unbootable system 🙂

Unfortunately ZFS Boot Environments rely on the loader(8), but even if you break this loader your data and your files are still on the disk. Even if, for some reason that I am not able to think of right now, you mess up the loader(8) so badly that a reinstall is needed, you have only broken the loader(8) – you did not break your files or your data – which for me is the most important thing.

That is why it is best to have a USB pendrive with 13.0-BETA3 (or the version you are upgrading to) ready before the upgrade, so you can use it as a rescue system to fix things if needed.

Regards.

Assuming you use UEFI, if your ESP is large enough, you could copy your current loader.efi to loader.old.efi before copying the latest version to the ESP. If you have enough space to accommodate, say, rEFInd, you can have a boot menu letting you choose between loader.efi and loader.old.efi. In my case, I have gone as far as also having loader.good.efi as a selection. This file is updated when a new boot loader is needed due to changes in ZFS features and only after the new boot loader is indeed verified to work properly. This setup has saved my bacon at least once. Note that rEFInd remembers your last selection, which may be undesirable for server systems.

Good point.

Having such a rEFInd setup sure protects you from loader problems, but having a prepared USB pendrive will also do in most cases … it will just take more time to recover 🙂

It is not explained where ‘/var/tmp/BE-13.z4IanfZt’ is found…how is that path determined?

I added the outputs of beadm(8) commands so now it will be known why I entered that directory and not another.

# beadm create 13.0.-BETA4

# beadm mount 13.0.-BETA4 /tmp/13.0-BETA4

# chroot /tmp/13.0-BETA4

– by preferring an explicit mount point at mount time, on-screen randomness is prevented.

For the purposes in this blog post, /tmp/13.0-BETA4 should be as good as /var/tmp/13.0-BETA4 – true?

For superior performance, my /tmp is type tmpfs (not zfs).

Yes, one can specify where to mount it.

The tmpfs can indeed be faster but I did not run any tests.

One reason why I moved the default mount directory for beadm(8) from /tmp to /var/tmp is that I once left a BE mounted at /tmp/somewhere and the clear_tmp_enable=YES option in /etc/rc.conf caused it to be deleted 🙂

Regards.
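If you prefer to pick the mount point yourself but still want a unique directory that lives outside of /tmp, mktemp(1) can create one. This is only a sketch; the function name be_mountpoint and the BE_TMP variable are made up for this example, and the directory it creates is then passed explicitly to the mount command.

```sh
#!/bin/sh
# Sketch only: be_mountpoint and BE_TMP are hypothetical names.
# Creates a unique directory named BE-<name>.<random> under /var/tmp
# (or under $BE_TMP when set) and prints its path.
be_mountpoint() {
    mktemp -d "${BE_TMP:-/var/tmp}/BE-$1.XXXXXXXX"
}
```

For example: beadm mount 13 "$(be_mountpoint 13)".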

Why do you use mount_msdosfs rather than some other mount command?

Why is a mount necessary? I think it is because you are in a chroot? If so, this could also be done from outside the chroot, via another ssh / terminal session?

It is needed to update the loader(8) on the UEFI partition – and that UEFI partition uses FAT32, which is mounted with the mount_msdosfs(8) command.

I added (host) and (BE) prefixes to commands so it will now be obvious what is executed and within which context.

Hope that helps.

Ahh, I do not use UEFI and was unaware.

If I am mistaken then please correct me but these should do for MBR and GPT on BIOS boot type – some refer to BIOS mode as CSM or Legacy.

BIOS/MBR

BIOS/GPT

Last time I tried I was not able to boot ZFS from MBR partitions – maybe that has changed now – but booting ZFS from GPT in BIOS mode works as desired.

Regards.

Good stuff; shouldn’t the bootloader update pull from the mounted BE/boot, not “/boot” which is from the current BE?

Basically:

Of course if you had rebooted into the BE-13 first, then /boot is correct.

Yes. You are right. I probably was not specific enough.

Thank you, great post!

Just wanted to add that when using bectl instead of beadm, it’s better to add the -o option here:

# bectl destroy -o 12.2

This way a lot more space is freed 🙂

Thanks.

Good to know about the -o flag for bectl(8) – my beadm(8) destroys that origin snapshot by default 🙂

Regards.

254428 – bectl(8) manual page update for bectl destroy, without and with option -o

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=254428

Also, to help minimise the amount of space taken by a boot environment, I run commands such as these before creating the environment:

# pkg autoremove

# pkg clean

(The likelihood of requiring reuse of the cached packages is low.)

Yes, these always save space … and I often forget to run them 🙂

# sed -i ” ‘/opensolaris/d’ /boot/loader.conf && cat /boot/loader.conf

You are right 🙂

The new OpenZFS in FreeBSD 13.0 does not need opensolaris.ko glue anymore 🙂

… crap … WordPress wrecked that command, altered what I typed. I discovered only after I copied from WordPress. Instead:

https://old.reddit.com/r/freebsd/comments/mrvv77/zpools_not_automounting_after_upgrade_to_130rel/gutb8rn/

Incidentally (not your problem) my Google sign in never persists for WordPress … a real drag 😦 I already made adjustments to browser settings.

Sorry to hear that.

WordPress is definitely not perfect, but from the available free options I have chosen it over the others because of one simple argument – it is possible to have a very wide layout/theme so all the code and configuration files will not be wrapped or scrolled – other reasons were far less important to me.

Manual page representations at https://man.freebsd.org/freebsd-update(8) and https://www.freebsd.org/cgi/man.cgi?query=freebsd-update&sektion=8&manpath=FreeBSD+13.0-current are outdated. Discovered through draft release notes for FreeBSD 13.0-RELEASE: https://cgit.freebsd.org/src/commit/?id=32f4592764d –

PAGER can be set to cat when a non-interactive pager is desired.

Thanks, good to know that.

Hi Vermaden,

Thanks for the guide.

Anyway, after the upgrade and booting into FreeBSD 13 RC5, Xorg is not working.

So I removed drm-kmod and drm-fbsd12kmod, and now I am unable to install a new port.

I got an error like the one in this thread.

https://forums.freebsd.org/threads/uname_r-and-osversion-do-not-agree-on-major-version-number.49295/

Hi,

is your /usr/ports tree up to date?

Try to update it with this:

# rm -rf /usr/ports

# portsnap auto

Hope that helps.

Gentle hint: portsnap does not yet provide the most recent updates. We’re still in a period of transition …

https://forums.FreeBSD.org/threads/ports-transitioned-to-git.79598/post-505412

Pity they moved to git(1) before first making portsnap(1) work …

Thx for the reply.

I ran into a new problem now. I tried to remove the ports tree and then build a new one, but I was still unable to update or install any port inside the new system.

Then I removed the new BE, but now I am unable to boot.

I am using GELI. I have run zpool import and can see my zpool, and the dataset is back as well. But it still can’t boot, can’t find /boot/zfsbootloader, ……

I mounted my efi partition, then copied boot.efi from the live CD to /boot/efi/bootx64 efi, and now the error I get is: failed to find bootable partition.

Strange … I do not know how to help you here … IMHO copy your data to another drive and reinstall.

Do you know, where did the /boot/boot1.efi tradition originate?

https://www.freebsd.org/releases/13.0R/relnotes/#boot recommends (your alternative) copying /boot/loader.efi

Thanks

Before that information appeared on FreeBSD 13.0-RELEASE release notes I found that on many forums and blog posts.

I also copied loader.efi (while making tests) file onto /mnt/efi/boot/bootx64.efi file and it also worked fine.

The man boot page gives some information but not that much either:

Nice post, thanks Vermaden! It’s interesting looking at this coming from a Solaris background, with some experience developing support for zones ‘pkg update’. I expect you’re familiar with that approach, but I’ll mention it here just in case.

Solaris did all of its update/upgrade in a new BE. Each BE captured all the zones (jails, sort of) on the system. Each Solaris instance (zone, or host-OS) was a “pkg image”, with each zone (jail) being a “linked image”. This was helped by the fact that in Solaris Zones on S11, zones inherited important filesystems from the host OS, (so always “sparse root zones”, never “full root” zones like S10 and FreeBSD) which meant that the kernel/libc libraries in zones were always in sync with the host OS. As a result, ‘pkg update’ was able to pull in new packages for all zones in parallel, utilizing a http cache (an Apache proxy cache) in the host OS if needed. I wrote about that stuff at https://timsfoster.wordpress.com/2011/11/09/the-ips-system-repository/

I feel spoilt having experienced that sort of support, especially on my recent 12.2 -> 13.0-RELEASE upgrade, where upgrading each of my jails was a bit of a chore after the host was updated. Every time I do this, I wish I’d spent more time working on scripts to simply rebuild all my jails from the ground up, via ansible, etc. but I wish even more that every similar user had that nice experience we had on Solaris.

Maybe sometime, I’ll take an axe to /usr/sbin/freebsd-update and see if I can get a more holistic experience to work 🙂

Thanks again for the blog posts, they’re awesome.

Thanks mate 🙂

When I gathered knowledge and materials for my ZFS Boot Environments presentations (most recent here – https://is.gd/BECTL) I got that Solaris makes updates in new BE and not in the currently running one like FreeBSD does.

From all UNIX systems besides the BSDs I treat Solaris (and later OpenSolaris … and now Illumos) as the other best UNIX out there. It has really killer features (zones/SMF/network stack/network virtualization/RBAC with root role/ZFS/IPS/… and so many more) and it has a lot of modern and well thought out solutions. SUN really pushed the boundaries with Solaris solutions. I really miss the xVM project (Solaris with XEN), which went dead – I really liked it and used it in the past on an oldschool Intel Q6600 quad core. Solaris development did not stop in 1995 (just sarcasm) like it did for HP-UX or IBM AIX. While Solaris has IPS (pkg), the very big news several years ago was that someone ported yum(8) from Linux to AIX to allow adding rpm(8) packages from a repository with dependencies … and I do not even have to mention that rpm(8) packages are kinda bastard things on IBM AIX systems … using entirely alien technology from Linux just to fill missing bits because ‘native’ IBM AIX packages are still stuck in a 1995 mentality … even Slackware packages are more usable than AIX or HP-UX packages.

While I agree with the Solaris way here that a ‘system’ upgrade should be done in a new BE (like in my article), application packages are a whole different story. I do not want to reboot into a new BE every time I want to have Firefox or Nginx updated. I just pkg upgrade packages on the running FreeBSD system and then execute the checkrestart command to check which applications or daemons need to be restarted because they either have newer binaries or use older libraries. This is also a place where the FreeBSD Base System concept is that great. You have a choice.

I mentioned that Solaris vs FreeBSD update difference in that ZFS Boot Environments presentations (most recent here – https://is.gd/BECTL) since page 46 and even provided instructions how to mimic the Solaris way on FreeBSD.

About the ‘full root’ and ‘sparse root zones’ I agree with you. When you have several older FreeBSD Jails it should be easier – more straightforward – to update a selected subset of them (or all) automatically … or with one process instead of a separate process for each Jail. Some Jails management tools address this problem – ezjail / iocage / bastille – to name a few. Not sure which one does that task best, as I mostly use ‘temporary’ Jails for testing purposes and the Jails that I run in production number several and not several hundred, so that problem never really bothered me.

One thing that I regret the most is that SUN did not make it and Oracle happened to it.

Regards.

Yup, all good. Thanks for the links.

One clarification: Solaris ips packages had a “reboot-needed” attribute that would require you install those to a non-live BE, so e.g. the kernel package had that attribute, but application packages usually didn’t, so you got the best of both worlds.

Did not know that, thanks.

One issue, and I ran into it because my laptop is GELI encrypted, is instead of copying /boot/boot1.efi to /mnt/efi/boot/bootx64.efi, you should copy loader.efi. When I copied boot1.efi on my laptop, it would not detect GELI, and couldn’t boot. So I downloaded the FreeBSD 13-RELEASE memstick image, booted on that, and copied loader.efi to /mnt/efi/boot/bootx64.efi, and was back in business. Thanks to RhodiumToad on #freebsd on irc for his help.

Thank you – I will update the post right away.

I’m struggling with why /usr/local isn’t in the BE. If it was, then picking a BE would also get all the correct pkg installs too. It really feels like it should be in the BE, since some startup elements from /usr/local/etc/rc.d (like bind, dhcpd, etc) fail if you “pkg upgrade” after a freebsd-update, but then have to fall back to a previous BE. For now, I’m snapshotting /usr/local everytime I create a new BE.

Well … /usr/local and /var/db/pkg are tied together as /usr/local is the PREFIX for the installed packages and the metadata information for these installed packages are kept under /var/db/pkg directory. Keep in mind to also backup or snapshot this one.

IMHO having a separate /usr/local makes BEs useful only for FreeBSD Base System updates, or requires a lot of manual snapshotting to make sure you have snapshots or backups.

I will not discourage you from having separate /usr/local directory – but as I stated before – you are making your life harder this way.

Hope that helps.

I guess I’m confused. On my system /usr/local is a separate filesystem, not in the BE (unlike /usr, for example). I did not do this on purpose, it’s just the way the FreeBSD installer set up the hierarchy. I believe this machine started with FreeBSD 10 and has just been upgraded to from there (to FreeBSD 13 now). Are you saying that current installers put /usr/local on / (in the BE)?

Thanks for the tip about /var/db/pkg, I did forget that. But I doubt I would use the “old” BE for very long. Just long enough to figure out what went wrong with the upgrade and try it again.

Maybe /usr/local used to be created as a separate filesystem – now it is part of the BE.

With zfs rename -u command you can ‘move’ a filesystem from and/or to BE if that is needed.

Hope that helps.

Installers don’t create a separate zroot/usr/local

/usr/local is part of zroot/usr

This may be the code you want to read: https://github.com/freebsd/freebsd-src/blob/master/usr.sbin/bsdinstall/scripts/zfsboot#L136

Thanks Dan. Not sure how I ended up with a separate zroot/usr/local, but I agree that it would be better to be in the BE. The zfs rename that vermaden suggested won’t move the filesystem into the BE. If I understand BE’s correctly, then I have to move all the files from zroot/usr/local to zroot/ROOT/default (the currently active BE).

rsync -aHS /usr/local/ /usr/local-new/ # trailing slashes copy the contents, not a nested 'local' directory

zfs rename zroot/usr/local zroot/usr/local-old

mv /usr/local-new /usr/local

reboot # force any running services to switch to the new /usr/local

It would be better to do this in single user mode, but I don’t feel like going through that hassle with a remote machine, and I don’t think there are any services running out of /usr/local that matter.
