I brought up this topic in the past, in the form of the more theoretical Silent Fanless FreeBSD Desktop/Server post and the more hands-on Silent Fanless FreeBSD Server – DIY Backup article.

One of the comments on the latter pointed out that I had compared a non-redundant backup solution (a single disk) with redundant backup in the cloud. Today, as this is my main backup system, I would like to show you a redundant backup solution with two disks in a ZFS mirror, along with real power usage measurements. This time I got the ASRock J3355B-ITX motherboard with only 10W TDP, which includes a 2-core Celeron J3355 2.0-2.5 GHz CPU, and the small, shiny REALAN H80 Mini ITX case. The case looks very nice and comes from AliExpress at a very low price of $33 for a new unit, with free shipping.

Build

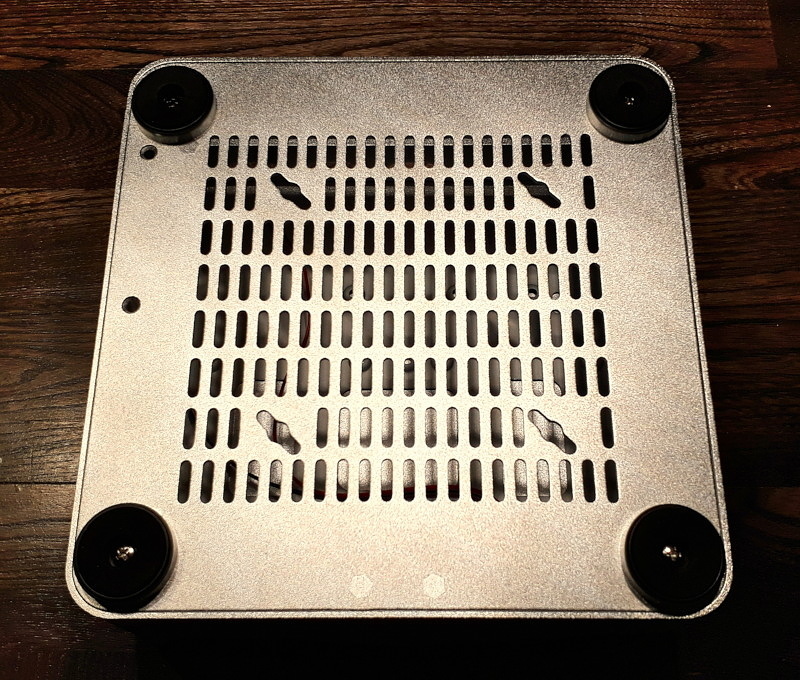

Here is what the REALAN H80 case looks like.

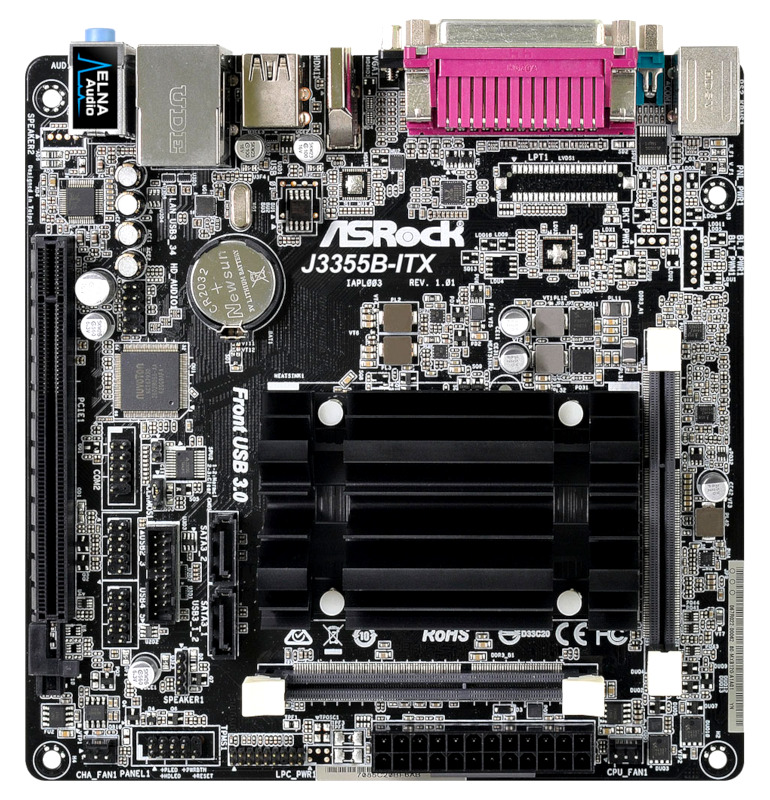

The ASRock J3355B-ITX motherboard.

As with the earlier build, the internal Seagate BarraCuda 5TB 2.5" SATA drives cost about $200 each. The same disk sold as the Seagate Backup Plus 5TB 2.5" in an external USB 3.0 case costs only $120, about 40% less, at least in the Europe/Poland location. I decided to buy the external ones and rip off their cases, which saved me about $160 (2 x $80).

Here is a simple read benchmark of these 2.5" disks.

```
% which pv
pv: aliased to pv -t -r -a -b -W -B 1048576

% pv < /dev/ada0 > /dev/null
1.35GiB 0:00:10 [ 137MiB/s] [ 137MiB/s]
^C

% dd if=/dev/ada0 of=/dev/null bs=8M
127+0 records in
127+0 records out
1065353216 bytes transferred in 7.494081 secs (142159287 bytes/sec)
^C
```

About 135MB/s per disk.
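pv(1) reports binary MiB/s, while dd(8) prints plain bytes/sec. The small helper below converts the dd figures into both units so the two tools can be compared. It is a sketch, and the `throughput` name is hypothetical. The sample values are copied from the dd output above.

```python
def throughput(bytes_transferred: int, seconds: float) -> tuple:
    """Return (MB/s, MiB/s) for `bytes_transferred` bytes moved in `seconds`."""
    rate = bytes_transferred / seconds  # bytes per second, as dd(8) prints it
    return rate / 1_000_000, rate / 2**20  # decimal MB/s and binary MiB/s


# Figures from the dd(8) run on /dev/ada0 above.
mb, mib = throughput(1_065_353_216, 7.494081)
print(f"{mb:.1f} MB/s = {mib:.1f} MiB/s")
```

This prints 142.2 MB/s, which is 135.6 MiB/s. That agrees with the "about 135" figure and with pv's 137 MiB/s reading.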

The ripped-off parts of the Seagate Backup Plus USB cases.

What made me laugh was that, just as the cases came in different colors (silver and gray), the disks inside also had different colors (green and blue) 🙂

… but their part numbers are the same. Here they are mounted on the REALAN H80 disk holder.

For the record, here are several real shots (not renders) of the REALAN H80 case. First, its front.

Back.

Side with USB port.

Bottom.

Top.

Case parts.

Overall, the REALAN H80 looks really nice. The slightly lower REALAN H60 (without the COM port holes in the back) looks even better, but I wanted to make sure there would be enough room for hot air inside the case, and space was not a problem for me.

Cost

The complete price tops out at $350. Here are the parts used.

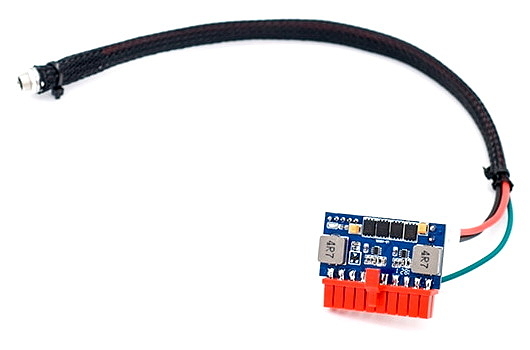

| PRICE | COMPONENT |
|------:|-----------|
| $49 | CPU/Motherboard ASRock J3355B-ITX Mini-ITX |
| $10 | RAM 4GB DDR3 |
| $13 | PSU 12V 7.5A 90W Pico (internal) |
| $2 | PSU 12V 2.5A 30W Leader Electronics (external) |
| $33 | Case REALAN H80 Mini ITX |
| $3 | SanDisk Fit 16GB USB 2.0 Drive (system) |
| $120 | Seagate 5TB 2.5" drive (ONE) |
| $120 | Seagate 5TB 2.5" drive (TWO) |
| $350 | TOTAL |

That is $110 for the ‘system’ and an additional $240 for the ‘data’ drives.
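The split between ‘system’ and ‘data’ is a simple sum, and the sketch below checks it. The prices are copied from the table. The $200 internal-drive price is the approximate figure quoted earlier for the savings. The dictionary keys are only labels.

```python
# Component prices in USD, copied from the table above.
SYSTEM = {
    "CPU/Motherboard ASRock J3355B-ITX": 49,
    "RAM 4GB DDR3": 10,
    "PSU Pico 90W (internal)": 13,
    "PSU Leader Electronics 30W (external)": 2,
    "Case": 33,
    "SanDisk Fit 16GB (system drive)": 3,
}
DATA = {
    'Seagate 5TB 2.5" drive (ONE)': 120,
    'Seagate 5TB 2.5" drive (TWO)': 120,
}

system_cost = sum(SYSTEM.values())
data_cost = sum(DATA.values())
# Approximate prices quoted in the text: $200 internal vs $120 external drive.
savings = len(DATA) * (200 - 120)

print(f"system ${system_cost} + data ${data_cost} = ${system_cost + data_cost}")
print(f"saved by shucking external drives: ${savings}")
```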

Today I would probably get the ASRock N3150DC-ITX or the Gigabyte GA-N3160TN motherboard instead, because of the built-in DC jack (compatible with a 19V power adapter) on the back. This would eliminate the need for the additional internal Pico PSU …

The ASRock N3150DC-ITX with built-in DC jack.

The Gigabyte GA-N3160TN with built-in DC jack.

The Gigabyte GA-N3160TN is also a very low-profile motherboard, as you can see from the back.

It may be a good idea to use it instead of the ASRock N3150DC-ITX to get more space above the motherboard.

PSU

As in the earlier Silent Fanless FreeBSD Server – DIY Backup article, I used a small, compact and cheap 12V 2.5A 30W external PSU instead of the large 90W PSU from FSP Group, as these low-power motherboards do not need a lot of power.

New Leader Electronics PSU label.

The internal power supply is a Pico PSU, which now tops out at 12V 7.5A (90W).
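A quick way to see why the small external PSU is enough is to compare its nameplate rating (volts x amps) with the highest reading from the Power Consumption measurements (17.8W). The sketch below does that. The ratings come from the labels quoted in the text. The `rated_watts` helper is a hypothetical name.

```python
# Nameplate ratings quoted in the text as (volts, amps).
PSUS = {
    "Leader Electronics (external)": (12.0, 2.5),
    "Pico PSU (internal)": (12.0, 7.5),
}
PEAK_MEASURED_W = 17.8  # highest reading in the Power Consumption table


def rated_watts(volts: float, amps: float) -> float:
    """DC output power of a supply from its voltage and current rating."""
    return volts * amps


for name, (volts, amps) in PSUS.items():
    watts = rated_watts(volts, amps)
    print(f"{name}: {watts:.0f} W rated, peak load uses {PEAK_MEASURED_W / watts:.0%}")
```

Even at full CPU and disk load, the box stays well under the 30W of the external brick. It uses roughly 59% of that rating.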

Power Consumption

I also measured the power consumption with a power meter.

The whole box, with two Seagate BarraCuda 5TB 2.5" drives for data in a ZFS mirror and the SanDisk 16GB USB 2.0 system drive, used about 10.4W when idle.

I used all the needed settings from my earlier The Power to Serve – FreeBSD Power Management article, with the CPU speed limited to between 0.4GHz and 1.2GHz.

The powerd(8) settings in the /etc/rc.conf file are below.

```
powerd_flags="-n hiadaptive -a hiadaptive -b hiadaptive -m 400 -M 1200"
```

I used python(1) [1] to load the CPU and dd(8) to load the drives. I used dd(8) so that 1 disk thread reads [2] from and writes [3] to both 2.5" disks: the reads go to each raw disk device, and the writes go to a file on the ZFS mirror pool. I temporarily disabled LZ4 compression for the write tests, because the zeros from /dev/zero would otherwise compress to almost nothing.

```
[1] # echo '999999999999999999 ** 999999999999999999' | python
[2] # dd if=/dev/ada0 of=/dev/null bs=1M
[2] # dd if=/dev/ada1 of=/dev/null bs=1M
[3] # dd if=/dev/zero of=/data/FILE bs=1M
```
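The python(1) one-liner [1] keeps one core busy because it does not finish in any practical time, so it has to be killed by hand. The sketch below is a hypothetical, time-limited alternative. It starts several CPU-bound Python processes, keeps them running while you read the power meter, and then terminates them. The names `load_cpu` and `BUSY_LOOP` are illustrative. The assumption that each of the "8 CPU Thread(s)" in the table corresponds to one separate busy process is also mine.

```python
import subprocess
import sys
import time

# Code run by each child interpreter: an endless, purely CPU-bound loop.
BUSY_LOOP = "while True: pass"


def load_cpu(workers: int = 8, seconds: float = 60.0) -> list:
    """Start `workers` busy Python processes, let them run for `seconds`,
    then terminate them. Returns the exit codes of the child processes."""
    procs = [
        subprocess.Popen([sys.executable, "-c", BUSY_LOOP])
        for _ in range(workers)
    ]
    try:
        time.sleep(seconds)  # measurement window for reading the power meter
    finally:
        for proc in procs:
            proc.terminate()  # SIGTERM on POSIX systems
        for proc in procs:
            proc.wait()  # reap the children so no zombies are left behind
    return [proc.returncode for proc in procs]
```

For example, `load_cpu(workers=8, seconds=300)` should roughly reproduce the "8 CPU Thread(s)" rows on the 2-core J3355 for five minutes. Separate processes are used instead of threads because CPython threads do not run Python code on several cores at once.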

| POWER | CPU LOAD | I/O LOAD |
|------:|----------|----------|
| 10.4 W | IDLE | IDLE |
| 12.9 W | IDLE | 1 DISK READ Thread(s) |
| 14.3 W | IDLE | 1 DISK READ Thread(s) + 1 DISK WRITE Thread(s) |
| 17.2 W | IDLE | 3 DISK READ Thread(s) + 3 DISK WRITE Thread(s) |
| 11.0 W | 8 CPU Thread(s) | IDLE |
| 13.4 W | 8 CPU Thread(s) | 1 DISK READ Thread(s) |
| 15.0 W | 8 CPU Thread(s) | 1 DISK READ Thread(s) + 1 DISK WRITE Thread(s) |
| 17.8 W | 8 CPU Thread(s) | 3 DISK READ Thread(s) + 3 DISK WRITE Thread(s) |

That is not much, considering that the ASRock N3150B-ITX motherboard (6W TDP) with just a single Maxtor M3 4TB 2.5" USB 3.0 drive used 16.0W with the CPU and I/O loaded. The new box uses only 1.8W more (on a loaded system) and gets redundancy on two 2.5" disks in return.
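To see what each kind of load costs on its own, one can subtract the idle reading from every row. The sketch below does that for the measurements above. It also repeats the 1.8W comparison with the earlier single-disk build. The short keys such as "1R+1W" are my own abbreviations for the table rows.

```python
# Readings (W) from the table above: (CPU load, I/O load) -> watts.
# "1R" = 1 disk read thread, "1R+1W" = 1 read + 1 write thread, and so on.
READINGS = {
    ("idle", "idle"): 10.4,
    ("idle", "1R"): 12.9,
    ("idle", "1R+1W"): 14.3,
    ("idle", "3R+3W"): 17.2,
    ("8cpu", "idle"): 11.0,
    ("8cpu", "1R"): 13.4,
    ("8cpu", "1R+1W"): 15.0,
    ("8cpu", "3R+3W"): 17.8,
}
EARLIER_BUILD_LOADED_W = 16.0  # ASRock N3150B-ITX + single Maxtor M3, loaded


def delta_over_idle(cpu: str, io: str) -> float:
    """Extra watts compared with the fully idle box, rounded to 0.1 W."""
    return round(READINGS[(cpu, io)] - READINGS[("idle", "idle")], 1)


for (cpu, io), watts in READINGS.items():
    print(f"{cpu:>5} / {io:<6} {watts:5.1f} W  (+{delta_over_idle(cpu, io):.1f} W over idle)")

extra = round(READINGS[("8cpu", "3R+3W")] - EARLIER_BUILD_LOADED_W, 1)
print(f"fully loaded vs. earlier single-disk build: +{extra:.1f} W")
```

The disks dominate: 8 busy CPU threads add only about 0.6W, while a single read thread adds about 2.5W.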

Commands

The FreeBSD crypto kernel module was able to squeeze about 68MB/s of random data from /dev/random, as this CPU has built-in AES-NI hardware acceleration. Note to Linux users: /dev/random and /dev/urandom are the same thing on FreeBSD. I used both dd(8) and pv(1) for this simple test. I ran it twice, with powerd(8) enabled and disabled, to check the difference between the CPU running at 1.2GHz and at 2.5GHz with Turbo mode.

Full speed with Turbo enabled (note 2001 instead of 2000 as the CPU frequency).

```
# /etc/rc.d/powerd stop
Stopping powerd.
Waiting for PIDS: 1486.

% sysctl dev.cpu.0.freq
dev.cpu.0.freq: 2001

% which pv
pv: aliased to pv -t -r -a -b -W -B 1048576

% dd /dev/null
1.91GiB 0:00:31 [68.7MiB/s] [68.1MiB/s]
265+0 records in
265+0 records out
2222981120 bytes transferred in 33.566154 secs (70226864 bytes/sec)
^C
```

With the CPU limited to 1.2GHz by the powerd(8) daemon, it was able to squeeze about 24MB/s.

```
# service powerd start
Starting powerd.

% which pv
pv: aliased to pv -t -r -a -b -W -B 1048576

% dd /dev/null
568MiB 0:00:23 [25.3MiB/s] [24.7MiB/s]
71+0 records in
71+0 records out
595591168 bytes transferred in 23.375588 secs (25479195 bytes/sec)
^C
```

Below is the dmesg(8) information about the USB and 2.5" drives used.

The dmesg(8) information for the SanDisk Fit USB 2.0 16GB drive.

```
# grep da0 /var/run/dmesg.boot
da0 at umass-sim1 bus 1 scbus3 target 0 lun 0
da0: Removable Direct Access SPC-4 SCSI device
da0: Serial Number 4C530002030502100093
da0: 400.000MB/s transfers
da0: 14663MB (30031250 512 byte sectors)
da0: quirks=0x2
```

… and the two Seagate BarraCuda 5TB 2.5" drives.

```
# grep ada /var/run/dmesg.boot
ada0 at ahcich0 bus 0 scbus0 target 0 lun 0
ada0: ACS-3 ATA SATA 3.x device
ada0: Serial Number WCJ0DRJE
ada0: 600.000MB/s transfers (SATA 3.x, UDMA6, PIO 8192bytes)
ada0: Command Queueing enabled
ada0: 4769307MB (9767541168 512 byte sectors)
ada1 at ahcich1 bus 0 scbus1 target 0 lun 0
ada1: ACS-3 ATA SATA 3.x device
ada1: Serial Number WCJ0213S
ada1: 600.000MB/s transfers (SATA 3.x, UDMA6, PIO 8192bytes)
ada1: Command Queueing enabled
ada1: 4769307MB (9767541168 512 byte sectors)
```
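The capacities that dmesg(8) prints can be checked from the sector counts. The "MB" values above work out to whole MiB (2^20 bytes), while the drive labels use decimal units. The sketch below shows both. The sector counts are copied from the output above, and the helper names are hypothetical.

```python
SECTOR_SIZE = 512  # bytes per sector, as reported by dmesg(8)


def capacity_mib(sectors: int, sector_size: int = SECTOR_SIZE) -> int:
    """Whole MiB (2**20 bytes); this matches the 'MB' figure printed above."""
    return sectors * sector_size // 2**20


def capacity_gb(sectors: int, sector_size: int = SECTOR_SIZE) -> float:
    """Decimal gigabytes (10**9 bytes), the unit used on drive labels."""
    return sectors * sector_size / 10**9


SEAGATE_SECTORS = 9_767_541_168  # ada0 and ada1
SANDISK_SECTORS = 30_031_250     # da0

for name, sectors in (("Seagate 5TB", SEAGATE_SECTORS), ("SanDisk Fit 16GB", SANDISK_SECTORS)):
    print(f"{name}: {capacity_mib(sectors)} MiB = {capacity_gb(sectors):.2f} GB")
```

The Seagate drives come out at 4769307 MiB, about 5000.98 GB. The "16GB" SanDisk has about 15.38 GB.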

The whole /var/run/dmesg.boot content (without disks) is shown below.

```
# cat /var/run/dmesg.boot
Copyright (c) 1992-2018 The FreeBSD Project.
Copyright (c) 1979, 1980, 1983, 1986, 1988, 1989, 1991, 1992, 1993, 1994
The Regents of the University of California. All rights reserved.
FreeBSD is a registered trademark of The FreeBSD Foundation.
FreeBSD 11.2-RELEASE-p7 #0: Tue Dec 18 08:29:33 UTC 2018
root@amd64-builder.daemonology.net:/usr/obj/usr/src/sys/GENERIC amd64
FreeBSD clang version 6.0.0 (tags/RELEASE_600/final 326565) (based on LLVM 6.0.0)
VT(vga): resolution 640x480
CPU: Intel(R) Celeron(R) CPU J3355 @ 2.00GHz (1996.88-MHz K8-class CPU)
Origin="GenuineIntel" Id=0x506c9 Family=0x6 Model=0x5c Stepping=9
Features=0xbfebfbff
Features2=0x4ff8ebbf
AMD Features=0x2c100800
AMD Features2=0x101
Structured Extended Features=0x2294e283
XSAVE Features=0xf
VT-x: PAT,HLT,MTF,PAUSE,EPT,UG,VPID,VID,PostIntr
TSC: P-state invariant, performance statistics
real memory = 4294967296 (4096 MB)
avail memory = 3700518912 (3529 MB)
Event timer "LAPIC" quality 600
ACPI APIC Table:
WARNING: L1 data cache covers less APIC IDs than a core
0 < 1
FreeBSD/SMP: Multiprocessor System Detected: 2 CPUs
FreeBSD/SMP: 1 package(s) x 2 core(s)
ioapic0 irqs 0-119 on motherboard
SMP: AP CPU #1 Launched!
Timecounter "TSC" frequency 1996877678 Hz quality 1000
random: entropy device external interface
kbd1 at kbdmux0
netmap: loaded module
module_register_init: MOD_LOAD (vesa, 0xffffffff80ff4580, 0) error 19
random: registering fast source Intel Secure Key RNG
random: fast provider: "Intel Secure Key RNG"
nexus0
vtvga0: on motherboard
cryptosoft0: on motherboard
acpi0: on motherboard
unknown: I/O range not supported
cpu0: on acpi0
cpu1: on acpi0
attimer0: port 0x40-0x43,0x50-0x53 irq 0 on acpi0
Timecounter "i8254" frequency 1193182 Hz quality 0
Event timer "i8254" frequency 1193182 Hz quality 100
atrtc0: port 0x70-0x77 on acpi0
atrtc0: Warning: Couldn't map I/O.
atrtc0: registered as a time-of-day clock, resolution 1.000000s
Event timer "RTC" frequency 32768 Hz quality 0
hpet0: iomem 0xfed00000-0xfed003ff irq 8 on acpi0
Timecounter "HPET" frequency 19200000 Hz quality 950
Event timer "HPET" frequency 19200000 Hz quality 550
Event timer "HPET1" frequency 19200000 Hz quality 440
Event timer "HPET2" frequency 19200000 Hz quality 440
Event timer "HPET3" frequency 19200000 Hz quality 440
Event timer "HPET4" frequency 19200000 Hz quality 440
Event timer "HPET5" frequency 19200000 Hz quality 440
Event timer "HPET6" frequency 19200000 Hz quality 440
Timecounter "ACPI-fast" frequency 3579545 Hz quality 900
acpi_timer0: port 0x408-0x40b on acpi0
pcib0: port 0xcf8-0xcff on acpi0
pci0: on pcib0
vgapci0: port 0xf000-0xf03f mem 0x90000000-0x90ffffff,0x80000000-0x8fffffff irq 19 at device 2.0 on pci0
vgapci0: Boot video device
hdac0: mem 0x91210000-0x91213fff,0x91000000-0x910fffff irq 25 at device 14.0 on pci0
pci0: at device 15.0 (no driver attached)
ahci0: port 0xf090-0xf097,0xf080-0xf083,0xf060-0xf07f mem 0x91214000-0x91215fff,0x91218000-0x912180ff,0x91217000-0x912177ff irq 19 at device 18.0 on pci0
ahci0: AHCI v1.31 with 2 6Gbps ports, Port Multiplier supported
ahcich0: at channel 0 on ahci0
ahcich1: at channel 1 on ahci0
pcib1: irq 22 at device 19.0 on pci0
pci1: on pcib1
pcib2: irq 20 at device 19.2 on pci0
pci2: on pcib2
re0: port 0xe000-0xe0ff mem 0x91104000-0x91104fff,0x91100000-0x91103fff irq 20 at device 0.0 on pci2
re0: Using 1 MSI-X message
re0: Chip rev. 0x4c000000
re0: MAC rev. 0x00000000
miibus0: on re0
rgephy0: PHY 1 on miibus0
rgephy0: none, 10baseT, 10baseT-FDX, 10baseT-FDX-flow, 100baseTX, 100baseTX-FDX, 100baseTX-FDX-flow, 1000baseT-FDX, 1000baseT-FDX-master, 1000baseT-FDX-flow, 1000baseT-FDX-flow-master, auto, auto-flow
re0: Using defaults for TSO: 65518/35/2048
re0: Ethernet address: 70:85:c2:3f:53:41
re0: netmap queues/slots: TX 1/256, RX 1/256
xhci0: mem 0x91200000-0x9120ffff irq 17 at device 21.0 on pci0
xhci0: 32 bytes context size, 64-bit DMA
usbus0 on xhci0
usbus0: 5.0Gbps Super Speed USB v3.0
isab0: at device 31.0 on pci0
isa0: on isab0
acpi_button0: on acpi0
acpi_tz0: on acpi0
atkbdc0: at port 0x60,0x64 on isa0
atkbd0: irq 1 on atkbdc0
kbd0 at atkbd0
atkbd0: [GIANT-LOCKED]
ppc0: cannot reserve I/O port range
est0: on cpu0
est1: on cpu1
ZFS filesystem version: 5
ZFS storage pool version: features support (5000)
Timecounters tick every 1.000 msec
hdacc0: at cad 0 on hdac0
hdaa0: at nid 1 on hdacc0
ugen0.1: at usbus0
uhub0: on usbus0
pcm0: at nid 21 and 24,26 on hdaa0
pcm1: at nid 20 and 25 on hdaa0
pcm2: at nid 27 on hdaa0
hdacc1: at cad 2 on hdac0
hdaa1: at nid 1 on hdacc1
pcm3: at nid 3 on hdaa1
uhub0: 15 ports with 15 removable, self powered
ugen0.2: at usbus0
uhub1 on uhub0
uhub1: on usbus0
uhub1: 4 ports with 4 removable, self powered
Trying to mount root from zfs:zroot/ROOT/default []...
random: unblocking device.
re0: link state changed to DOWN
```

ZFS Pool Configuration

To get a higher LZ4 compression ratio, I use a larger record size (1MB) on this ZFS mirror pool. Here is the ZFS pool status.

```
% zpool status data
  pool: data
 state: ONLINE
  scan: scrub repaired 0 in 44h14m with 0 errors on Mon Feb 11 07:13:42 2019
config:

        NAME                STATE     READ WRITE CKSUM
        data                ONLINE       0     0     0
          mirror-0          ONLINE       0     0     0
            label/WCJ0213S  ONLINE       0     0     0
            label/WCJ0DRJE  ONLINE       0     0     0

errors: No known data errors
```

I get 4% compression (1.04x) on that ZFS pool. It is about 80% full, mostly with movies and photos, which are already compressed. So while such a compression ratio may not look great, it still gives some extra space. For example, 4% of 4TB of data is about 160GB of ‘free’ space.

```
% zfs get compressratio data
NAME  PROPERTY       VALUE  SOURCE
data  compressratio  1.04x  -
```
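The 160GB figure follows from how ZFS reports compressratio, which is logical (uncompressed) size divided by physical size. The sketch below assumes about 4TB of physical space is in use. It shows how much more logical data fits at a given ratio. The function name is hypothetical, and the 2x case is only an illustration.

```python
def extra_logical_tb(physical_tb: float, compressratio: float) -> float:
    """Extra logical data (TB) that fits in `physical_tb` of pool space at a
    given ZFS compressratio (logical size / physical size)."""
    return physical_tb * compressratio - physical_tb


# About 4TB used on the pool at the measured 1.04x ratio.
print(f"{extra_logical_tb(4.0, 1.04) * 1000:.0f} GB")
# The same pool at a hypothetical 2x ratio (highly compressible data).
print(f"{extra_logical_tb(4.0, 2.0):.1f} TB")
```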

Here is the ZFS pool configuration.

```
# zpool history
History for 'data':
2018-11-12.01:18:33 zpool create data mirror /dev/label/WCJ0229Z /dev/label/WCJ0DPHF
2018-11-12.01:19:11 zfs set mountpoint=none data
2018-11-12.01:19:16 zfs set compression=lz4 data
2018-11-12.01:19:21 zfs set atime=off data
2018-11-12.01:19:34 zfs set primarycache=metadata data
2018-11-12.01:19:40 zfs set secondarycache=metadata data
2018-11-12.01:19:45 zfs set redundant_metadata=most data
2018-11-12.01:19:51 zfs set recordsize=1m data
(...)
```

We do not need the full redundant_metadata=all default, because the mirror already keeps two copies of everything. The extra metadata copies are mostly useful on single-disk configurations.

Self Solution Cost

As in the earlier post, I will again calculate how much energy this server consumes and what that costs. Currently 1kWh of energy costs about $0.20 in Europe/Poland (rounded up). This means that running a computer drawing 1000W for 1 hour would cost you $0.20 on the electricity bill. This system uses 10.4W when idle and 12.9W during a single disk read. Most of the time the server will be idle, so I assume an 11.0W average for pricing purposes.

That gives $0.0022 for an 11.0W device running for 1 hour.

Below you will also find the calculations for 1 day (24x multiplier), 1 year (another 365.25x multiplier), 3 years (another 3x multiplier) and 5 years (5x the yearly cost).

| COST | TIME |
|-----:|------|
| $0.0022 | 1 HOUR(s) |
| $0.0528 | 1 DAY(s) |
| $19.285 | 1 YEAR(s) |
| $57.856 | 3 YEAR(s) |
| $96.426 | 5 YEAR(s) |

Adding the server cost ($350) gives the TCO (total cost of ownership) of our self-hosted 5TB storage service.

| COST | TIME |
|-----:|------|
| $369.29 | 1 YEAR(s) |
| $407.86 | 3 YEAR(s) |
| $446.43 | 5 YEAR(s) |

Our total 3-year TCO is $407.86 and the 5-year TCO is $446.43. This assumes the system runs non-stop. Features like Wake on LAN could reduce the power usage even further.
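Both tables come from the same few inputs: average watts, price per kWh and the hardware cost. The sketch below reproduces them so you can plug in your own electricity price or a different average draw. The constants are the assumptions stated in the text, and the function names are hypothetical.

```python
PRICE_PER_KWH = 0.20  # USD, rounded-up Europe/Poland price from the text
AVERAGE_WATTS = 11.0  # assumed average draw (the box is mostly idle at 10.4W)
SERVER_COST = 350     # USD, hardware total from the Cost section
HOURS_PER_YEAR = 24 * 365.25


def energy_cost(watts: float, hours: float, price_per_kwh: float = PRICE_PER_KWH) -> float:
    """Electricity cost in USD of drawing `watts` for `hours`."""
    return watts / 1000 * hours * price_per_kwh


def tco(years: float) -> float:
    """Hardware cost plus electricity for running the box non-stop."""
    return SERVER_COST + energy_cost(AVERAGE_WATTS, HOURS_PER_YEAR * years)


for label, hours in (("1 HOUR", 1), ("1 DAY", 24), ("1 YEAR", HOURS_PER_YEAR)):
    print(f"{label:>6}: ${energy_cost(AVERAGE_WATTS, hours):.4f}")
for years in (1, 3, 5):
    print(f"TCO {years} YEAR(s): ${tco(years):.2f}")
```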

Cloud Storage Prices

This time, after searching for the cheapest cloud-based storage, I found these services.

- Amazon Drive

- Amazon S3 Glacier Storage

- Backblaze B2 Cloud Storage

- Google One

- Dropbox Basic

Here is their cost for 5TB of data over a 1-year period.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $300 | 1 YEAR(s) | Amazon Drive |
| $310 | 1 YEAR(s) | Google One |
| $240 | 1 YEAR(s) | Amazon S3 Glacier Storage |
| $450 | 1 YEAR(s) | Backblaze B2 Cloud Storage |
| $360 | 1 YEAR(s) | Dropbox Basic |

For the Backblaze B2 Cloud Storage I assumed the average of the upload and download prices, because upload is two times cheaper than download.

Here is their cost for 5TB of data over a 3-year period.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $900 | 3 YEAR(s) | Amazon Drive |
| $930 | 3 YEAR(s) | Google One |
| $720 | 3 YEAR(s) | Amazon S3 Glacier Storage |
| $1350 | 3 YEAR(s) | Backblaze B2 Cloud Storage |
| $1080 | 3 YEAR(s) | Dropbox Basic |

Here is their cost for 5TB of data over a 5-year period.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $1500 | 5 YEAR(s) | Amazon Drive |
| $1550 | 5 YEAR(s) | Google One |
| $1200 | 5 YEAR(s) | Amazon S3 Glacier Storage |
| $2250 | 5 YEAR(s) | Backblaze B2 Cloud Storage |
| $1800 | 5 YEAR(s) | Dropbox Basic |

Now let's compare the costs of our own server with the various cloud services.

If we run our server for just 1 year, the price is similar.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $369 | 1 YEAR(s) | Self Build NAS |
| $300 | 1 YEAR(s) | Amazon Drive |
| $310 | 1 YEAR(s) | Google One |
| $240 | 1 YEAR(s) | Amazon S3 Glacier Storage |
| $450 | 1 YEAR(s) | Backblaze B2 Cloud Storage |
| $360 | 1 YEAR(s) | Dropbox Basic |

It gets interesting when we compare the 3-year costs. The cloud services cost about 1.8 to 3.3 times as much as self-hosting our own server. One may argue that cloud data is kept in many places. But even if we bought two such boxes and put one somewhere else, for example at a friend's place in Jamaica or in another part of the world, their 3-year cost of about $816 would still be lower than most of the cloud services below.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $408 | 3 YEAR(s) | Self Build NAS |
| $528 | 3 YEAR(s) | Self Build NAS (assuming one of the drives failed) |
| $900 | 3 YEAR(s) | Amazon Drive |
| $930 | 3 YEAR(s) | Google One |
| $720 | 3 YEAR(s) | Amazon S3 Glacier Storage |
| $1350 | 3 YEAR(s) | Backblaze B2 Cloud Storage |
| $1080 | 3 YEAR(s) | Dropbox Basic |

… but over 5 years, using a cloud service instead of a self-hosted NAS is roughly 3-5 times more expensive … and these were the cheapest cloud services I was able to find.

| PRICE | TIME | SERVICE |
|------:|------|---------|
| $447 | 5 YEAR(s) | Self Build NAS |
| $567 | 5 YEAR(s) | Self Build NAS (assuming one of the drives failed) |
| $1500 | 5 YEAR(s) | Amazon Drive |
| $1550 | 5 YEAR(s) | Google One |
| $1200 | 5 YEAR(s) | Amazon S3 Glacier Storage |
| $2250 | 5 YEAR(s) | Backblaze B2 Cloud Storage |
| $1800 | 5 YEAR(s) | Dropbox Basic |
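The "how many times more expensive" statements are ratios of the totals in the comparison tables above. The sketch below computes them for every period, with and without one replaced drive. The rounded self-hosted totals and the $120 drive price come from the text. The function name is hypothetical.

```python
SELF_HOSTED = {1: 369, 3: 408, 5: 447}  # USD, self-hosted TCO from the tables
DRIVE_REPLACEMENT = 120                 # USD, one failed 5TB drive replaced
CLOUD_PER_YEAR = {                      # USD per year for 5TB
    "Amazon Drive": 300,
    "Google One": 310,
    "Amazon S3 Glacier Storage": 240,
    "Backblaze B2 Cloud Storage": 450,
    "Dropbox Basic": 360,
}


def ratios(years: int, drive_failed: bool = False) -> dict:
    """Cloud cost divided by self-hosted cost for the given period."""
    own = SELF_HOSTED[years] + (DRIVE_REPLACEMENT if drive_failed else 0)
    return {name: per_year * years / own for name, per_year in CLOUD_PER_YEAR.items()}


for years in (1, 3, 5):
    r = ratios(years)
    print(f"{years} YEAR(s): cloud costs {min(r.values()):.1f}x to {max(r.values()):.1f}x the self-hosted NAS")
```

Over 5 years the range is about 2.7x to 5.0x. With a replaced drive it drops to about 2.1x to 4.0x.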

… and ‘anywhere’ access is not an argument for cloud services, because you can get an external IP address for your NAS or use Dynamic DNS for free. You may also wonder why I compare such a ‘full featured NAS’ with S3 storage … well, with rclone (rsync for cloud storage) you are able to synchronize your files with almost anything 🙂

Not to mention how much more privacy you have by keeping all your data to yourself … and that is priceless.

You can also set up a lot more services on such hardware, like FreeNAS with Bhyve/Jails virtualization … or a Nextcloud instance … or Syncthing … while cloud storage is only that: storage in the cloud.

Summary

I am not sure what else I could include in this article. If you have an idea of what else I could cover, let me know.

Nice article, great solution for home/personal use.

I can see why the issue of redundancy and ‘anywhere’ access came up. It would still be a concern for a business setup or for critical data.

The issues that will come up in this scenario are:

1. If you were away and experienced a power loss or internet outage at the site, how would you reach your data?

2. If the motherboard or power components crap out, how fast can you get the parts and be back online?

Those are probably two things to consider when comparing with the cost of cloud backup. One would need a second machine off-site for this to be considered a replacement for cloud backup. That way you're also safe from the worst-case scenario (theft or natural disasters).

Great work though.


Thank you.

I treat it as home/personal solution. I will probably use something different for real business/enterprise needs.


Very interesting solution and your measurements are very helpful to get an idea of TCO and how choosing the right components affects it.

I beg to somewhat disagree with some of your calculations and statements.

I will just assume that the work to set up and maintain the whole thing is not a cost or burden to be included in the TCO because this solution is only for techie geeks.

If we are using this as our main storage, not (just) as backup, then “access anywhere” becomes important. I agree with @Michael that you would need at least a UPS, a redundant machine and a redundant internet connection, and you still wouldn't match the availability of cloud storage, while the TCO would likely skyrocket. Or you'd need a second machine at your friend's in Jamaica, who must also have more than decent connectivity… not sure that many have such a friend. I don't, and even if I did, I would not sleep well knowing that I would need to monitor and maintain that machine remotely… even when a part breaks or the thing loses its connectivity.

Nowadays everyone seems so fixated on redundant storage as in redundant disks, probably the same people who raised this topic with you. Disk redundancy is relatively common since it's relatively cheap and easy to set up nowadays. But let's first remember that redundancy is just for high availability (I can continue working even if something breaks), not for backup (I can recover data I lost). Considering today's disk reliability vs other components (e.g. motherboard, power supply, even software), if you do need high availability, you'd be far better off with 2 machines with 1 disk each rather than 1 machine with 2 disks. But then that would be more expensive and far more complex to make work seamlessly (I really can continue working if something breaks, without stopping to reconfigure addresses and the like).

If we're talking about backup, then, again along @Michael's line, we should consider the 3-2-1 rule: 3 copies of your data, of which 2 are backups on different media, of which 1 is stored offsite in case of disaster. At home I have 1 NAS for live storage, another 1 for backup (well, the equivalent of your server actually) and also backup in the cloud… because I don't have that friend in Jamaica to give him another server.

Someone could easily choose to go for cloud storage only, with a provider that takes care of the 3-2-1 strategy and the ability to self restore previous versions of files. That is if they didn’t need quick access to multiple TB of their data regardless of the speed and availability of their internet connection.

When you calculate costs in your article, you always take into consideration the “allocated” storage capacity: “I think I will need 4TB in 5 years time, so I buy 5TB of storage now to be on the safe side”. Glacier and Backblaze B2 are “pay what you use”, so I might end up paying maybe for 2 TB in the 1st year, then ramping smoothly up to 4 TB at the end of the 5th year.

Having said that, I do think your set up is nice… I do use 2 NASes at home, which conceptually are the same thing. It is also less expensive than cloud storage, particularly if you need multiple TB of that. But a home server is never going to match the nearly 0 effort for set up and maintenance, the uptime and the everywhere availability of cloud storage. If you really wanted to go anywhere near that, you’d have to spend many times as much.


Thanks.

I actually use a UPS with this box, mostly to protect it from power outages and surges.

Yes, it's a home/personal solution; I cannot compete with a cloud provider 🙂

The other box I use is described in the earlier ‘episode’ (https://vermaden.wordpress.com/2018/08/28/silent-fanless-freebsd-server-diy-backup/). I used two disks in the ‘main’ place to make sure that this data is, like you said, more available when one of the disks fails, so I do not have to worry about losing a disk and being without ‘fast’ access to the data.

Besides these two boxes, I also have another offline copy, if that counts 🙂 It is not updated that frequently, but losing a week would not hurt me much, considering that both backup boxes (3 disks), which are in different locations, would have to die at the same time.

The biggest concern for me here is ‘privacy’. Maybe if I only sent encrypted backups to these cloud providers I would sleep well, but that would require extra work from me to split them intelligently between various providers, so that none of them has all the parts needed to access the data … something like a ZFS RAIDZ2 (RAID6) pool with ‘disk files’ split across 6 cloud providers. Seems like a good idea for a next blog post :p

Yes, the calculations are not perfect, but they are quite close to my situation, as I have about 2.8-3.0 TB of data. Even if we cut the cloud providers' costs by 1/3, the self-hosted NAS would still be cheaper over 3 and 5 years.

I think that maintenance is biggest at the beginning, when creating the first self-hosted NAS: thinking everything through, setting everything up and so on. With the next box or a NAS replacement you already have the ideas/configs/etc., so it becomes ‘natural’ to just repeat the process, at least for me.

I am not trying to be more available than cloud providers (and their costs get lower every year), but even when the motherboard fails I can still attach the drives to a laptop with an adapter. With a major outage at the cloud provider you do not have access to your data, but I do not know how often such things happen, as I rarely use cloud services.

Regards,

vermaden

One more thing to add, for either business or personal use: on-site backup has its benefits.

You don't want to wait hours to get your data back.

In all situations, one has to assess whether cloud backup or a mix of cloud and on-site backup best fits the needs…

Yep, I would eventually treat cloud storage as an additional offsite backup, definitely not the primary backup place 🙂

Love your article and thanks for sharing such a nice and fun project!

Would like to add my 2 cents:

I work with SOHO clients, and their biggest complaint with online storage is the speed, bad/unreliable/intermittent connections and how their ISP charges them when they go over their capped bandwidth. Internet connection costs and over-usage penalties should be factored in for users who save big data. If they're in the boonies, your project is the clear winner.

I still see people who totally don't trust the cloud, especially when they've been hacked. Having local storage offers peace of mind to these folks, and that's one thing cloud providers can never compete with.

As one reader astutely pointed out, if you need your big data backup right away, you're in trouble. Time is money and value is calculated case by case. Biz downtime cost varies but can be expensive, from $100/hr to $20K/hr.

You can turn on transparent disk compression to increase your storage. My clients' data are very highly compressible, up to 75%. Let's even say that your average data compression is 50%. You just divided your cost by half and doubled your capacity. That's how these cloud providers make their killing. Not much joy for multimedia users and, again, they complain their Internet is slow/intermittent and refuse to pay more for an upgrade.

ZFS has bit-rot protection. I have so many clients whose files spontaneously developed silent data corruption with online providers such as Dropbox. Kudos to Backblaze though, with their Reed-Solomon ECC.

You can schedule sleep/wake around working hours, or tell the drives to go to sleep and, as you said, wake up on LAN. Rotational drives are getting ridiculously cheap and less power hungry. Would love to see your creativity at work on ultra low power SBCs (Single Board Computers) in the near future.

You can run a constant virus checker and virtual machines on your server. Clients just love how I turn their old laptops/smart phones/tablets into terminals, cast to smart TV and work with never-ending emails and office suites. Try that with cloud storage.

As in any case, there are always drawbacks, and the biggest show stopper is a drive failing within 5 years, which is highly likely. That cost has to be factored in. I see them failing within the 1st year, and if they cross that mark, they fail after 3-4 years. I haven't seen a main board fail for over 5 years, and since you have the fanless power supply, it's a very robust system.

Keep those creative juices of yours flowing.

Best,

Burt


Thanks 🙂

Thanks for pointing that out. I did not think about that when I compared my ‘little’ setup with cloud services.

My experience with SBCs is not great, but before using Mini ITX solutions such as the one described in this article I used a Raspberry Pi 2B with a 2.5" USB drive for the offsite backups. I described my experiences in the Ancestor paragraph of https://vermaden.wordpress.com/2018/08/28/silent-fanless-freebsd-server-diy-backup/ and will repaste it here for convenience.

// PASTE BEGIN

Before this setup I used a Raspberry Pi 2B with an external Western Digital 2TB 2.5" USB 3.0 disk, but the storage space requirements became larger, so I needed to increase that. It was of course with GELI encryption and ZFS with LZ4 compression enabled on top. The four humble ARM32 cores and the soldered 1GB of RAM were able to squeeze a whopping 5MB/s read/write from this ZFS/GELI setup. That did not hurt me, as I used rsync(1) for differential backups and the Internet connection to that box was limited to about 1.5MB/s. I would still use that setup, but it just won't boot with the larger Maxtor M3 4TB disk because that disk requires more power, and I already used a stronger 5V 3.1A charger than the 5V 2.0A suggested by the vendor. Even the safe_mode_gpio=4 and max_usb_current=1 options in /boot/msdos/config.txt did not help.

// PASTE END

I also recently got an ODROID-C2 board with 2 GB RAM to serve ‘movie’ content to my TV. The only reason for such a solution is that FreeBSD 11.2/12.0 currently has a problem with HDMI output on this motherboard: no output at all. I could try to find a motherboard on which it would work, or I could get an ‘external’ solution, which is the road I took. It is probably the only Linux I use: the LibreELEC dedicated KODI distribution, which mounts ‘movies’ from the main NAS/backup box in read-only mode over CIFS and plays them. Also nothing spectacular here 🙂

Also great point here, thanks.

Agreed. The biggest problem (or the most risky part) of this system is the drives.

Thanks 🙂

Excellent write up! It is pretty cheap but still powerful enough little machine. I am curious how it performs and what the power consumption is when clock speeds aren’t limited with powerd.

Regarding TCO calculations, if we ignore all other parameters and forget about maintenance I would still disagree about some calculations. I doubt that all of the 5TB of space will be used up immediately, and this is where most of the people (including me) go wrong when comparing with cloud storage pricing.

Advantage for services like S3 is that you don’t have to commit upfront, instead, you’re just billed by the usage. Combine that with storage tiers and you can get pretty cheap solution in the cloud as well.

That being said, having machine at home is more than fine. Privacy, physical security and much more if you don’t trust your cloud provider. And local network is most likely far faster than ISP link you have at home (unless you’re on 1Gbps link or something) 🙂


Thank You.

I will measure that and add an update.

Agreed. I already have about 3TB, so for the price comparison you would have to take only 3/5 of the cloud costs. That still makes my little ‘box’ cheaper, but your point is valuable.

Yup 🙂

Great post! Newbie question: what are the factors that determine whether you need fans or not? In other words, if I wanted to build an appliance myself, how do I know that it won't run too hot if I don't put any fans in it?

Thanks.

Depends 🙂

It is easier when you get an SoC solution like these Mini-ITX boards, which have the CPU soldered on and already come with a heatsink and without a fan. This way you are sure that they do not need any fans. It is mostly about TDP. When it is about 5-10W, in most cases it can be fanless. Sometimes even 15-20W can be fanless.

When you build a box with a motherboard that has a CPU socket, you have to go by the CPU TDP.

For Intel CPUs you can use their filters page:

https://ark.intel.com/content/www/us/en/ark/search/featurefilter.html?productType=873

For example, a filter for Intel CPUs with a TDP equal to or less than 15W:

https://ark.intel.com/content/www/us/en/ark/search/featurefilter.html?productType=873&3_MaxTDP-Max=15

Same with AMD CPUs. Go to one of those lists and sort it by TDP column.

https://www.amd.com/en/products/specifications/processors

https://www.amd.com/en/products/specifications/processors/2316

https://www.amd.com/en/products/specifications/embedded

Now, if you get a CPU with a TDP of 10-15W, you could probably use a large passive cooler without a fan. When building such a system you should also check the various tests/reviews of passive coolers on the market for the socket you are building on.

Recently I have also seen people build passive desktop or gaming systems with CPUs of 35W or even 60W TDP – they just need a larger cooler or, as an alternative, one really large slow (and thus really quiet) fan in the case.

For graphics cards it's similar to SoC systems – you just get the 'passive' version of such a graphics card and that's it – they often come with a large passive cooler that takes two or even three PCI slots. The 'problem' here is that high-end graphics cards are not available with passive coolers – this is where quiet water cooling solutions come into play.

Hope that helps.

Pingback: Valuable News – 2019/04/08 | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Thanks, that’s really valuable information! One more thing, if I may: would you still recommend a setup with the kind of hardware you show in this post for running multiple services (home-bound, less than ten users), say a media server (e.g., Plex), a web server, file sync (e.g., NextCloud), VPN (e.g., WireGuard),… under FreeBSD? Or would you suggest something more powerful as a general purpose server? Or perhaps a cluster of servers like this?

It depends.

Will all 9 people be syncing their mobile devices to Nextcloud at the same time, while 4 of them watch 1080p movies over Plex and 5 others surf the Internet through WireGuard?

You can use FreeBSD Resource Limits – https://www.freebsd.org/doc/handbook/security-resourcelimits.html – to limit, for example, the CPU usage of 'non-interactive' applications (Nextcloud/Syncthing/…) or of their Jails, so that with less CPU time they will not interfere with interactive services like Plex, which should have priority for CPU and disk time.
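A minimal rctl(8) sketch of that idea, assuming the 'non-interactive' service runs in a jail named nextcloud (a hypothetical name) and that kern.racct.enable=1 has been set in /boot/loader.conf followed by a reboot. The 50% CPU and roughly 10 MB/s write limits are example values only, not recommendations; the script just prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: throttle a hypothetical 'nextcloud' jail with rctl(8) so that
# interactive services (e.g. Plex) keep priority for CPU and disk.
# Assumes kern.racct.enable=1 in /boot/loader.conf (requires a reboot).
# Dry run by default; set DRY_RUN=0 to really apply the rules.

DRY_RUN="${DRY_RUN:-1}"
JAIL="nextcloud"   # hypothetical jail name

# run: print the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# rule SUBJECT ID RESOURCE ACTION AMOUNT -> prints an rctl rule string
rule() {
  printf '%s:%s:%s:%s=%s\n' "$1" "$2" "$3" "$4" "$5"
}

# at most 50% of a single CPU core for the whole jail
run rctl -a "$(rule jail "${JAIL}" pcpu deny 50)"

# slow down disk writes to about 10 MB/s (value in bytes per second)
run rctl -a "$(rule jail "${JAIL}" writebps throttle 10485760)"
```

Running rctl without arguments lists the active rules, rctl -r jail:nextcloud removes them again, and the same rule strings can be placed in /etc/rctl.conf to have them applied at boot.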

These Seagate BarraCuda 5TB drives are SMR drives (slower for writes), which means it may not be the best idea to use them in an environment with heavy random writes. I would start with two non-SMR HDDs in a mirror, then eventually attach a 32GB (or larger) USB 3.0 drive to a USB 3.0 port and set it up as ZFS L2ARC (read cache), which helps with slow drives in many cases and is cheap at the same time. If that does not help, you will have to migrate to 2 x 4TB SSDs (or smaller, depending on your needs) – they can be the cheaper ones.
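Attaching such a USB drive as L2ARC comes down to a single zpool(8) command. The sketch below assumes a pool named backup and the USB drive showing up as da2; both names are hypothetical, so check zpool status and camcontrol devlist first. It only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: attach a USB 3.0 flash drive as ZFS L2ARC (read cache).
# Pool and device names are hypothetical; verify yours with
# 'zpool status' and 'camcontrol devlist' before running for real.
# Dry run by default; set DRY_RUN=0 to really modify the pool.

DRY_RUN="${DRY_RUN:-1}"
POOL="${1:-backup}"   # hypothetical pool name
DEV="${2:-da2}"       # hypothetical USB drive device

# run: print the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# add the device as a cache vdev (it only holds copies of pool data)
run zpool add "${POOL}" cache "${DEV}"

# per-vdev I/O statistics, the cache device is listed separately
run zpool iostat -v "${POOL}"
```

If the cheap USB drive dies, no pool data is lost, and zpool remove backup da2 detaches it again. Keep in mind that L2ARC headers take up some ARC memory, which matters on a box with little RAM.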

You may want to choose a motherboard with a 4-core CPU (or even the 8-core one from the link below):

https://www.asrockrack.com/general/productdetail.asp?Model=C2750D4I

… but this is all guessing.

IMHO start with something cheap and small and expand as the need for power grows.

Thanks for the bag of tips! I’ll definitely follow your suggestion and proceed incrementally.

Good luck! Share your results somewhere 🙂

How do the costs compare with the FreeBSD-based service Tarsnap? http://www.tarsnap.com/

Hi,

As much as I like the idea of the Tarsnap service and the fact that it runs on BSD, it's just too expensive.

I compared Tarsnap and Backblaze costs in the previous article in this 'Fanless Series' (at the end):

https://vermaden.wordpress.com/2018/08/28/silent-fanless-freebsd-server-diy-backup/

While storing 4TB of data for 3 years at Backblaze costs about $737, the same storage at Tarsnap would cost $35021, so Tarsnap is worth it only for very small backups.

Regards,

vermaden

Hi, interesting post!

Any particular reason you use the J3355B instead of the J4105ITX?

Thanks 🙂

I was able to get the J3355 for a better price than the J4105, and I did not need the extra cores.

If I had been able to get the J4105 for a price similar to the J3355, I would have gotten it instead.

Regards.

Pingback: FreeBSD Enterprise 1 PB Storage | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Pingback: Silent Fanless FreeBSD Server – DIY Backup | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Pingback: Silent Fanless FreeBSD Desktop/Server | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

For your use case of a backup server I think this is not an issue, but what if you wanted to, just as an example, ssh -X in and run Firefox, which was installed on the system disk – wouldn't it run "slow" because your system disk is a USB thumbdrive? Or are you using a fancy ramdisk setup like FreeNAS or NanoBSD uses? Is your system disk UFS?

The USB drives I got were meant to be small, but you can get really fast USB drives and even USB SSDs. You can also use the onboard PCI-Express slot with an adapter to attach an M.2 SSD there.

… but even with a 'typical' USB drive, Firefox will load and run just fine.

I am using a plain FreeBSD system here, so no 'fancy' ramdisks, but nothing prevents you from disabling the Firefox on-disk cache and enabling only the RAM cache … that should also speed things up … or from creating a ramdisk for the Firefox cache if you want a bigger cache.
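The ramdisk variant could look like the tmpfs(5) sketch below, which mounts a RAM-backed file system over the directory where Firefox keeps its cache on FreeBSD (~/.cache/mozilla). The user defaults to the current one; the /home/<user> path and the 512 MB size are assumptions to adjust. It only prints the commands unless DRY_RUN=0 is set, and the real mount has to be done as root.

```shell
#!/bin/sh
# Sketch: keep the Firefox disk cache in RAM using tmpfs(5).
# Assumptions: home directory under /home/<user>, 512 MB cache limit.
# Dry run by default; set DRY_RUN=0 and run as root to really mount it.

DRY_RUN="${DRY_RUN:-1}"
TARGET_USER="${1:-$(id -un)}"            # defaults to the current user
TARGET_UID="$(id -u "${TARGET_USER}")"
TARGET_GID="$(id -g "${TARGET_USER}")"
CACHE_DIR="/home/${TARGET_USER}/.cache/mozilla"
SIZE="512m"                              # upper limit of RAM for the cache

# set the owner explicitly instead of inheriting it from the mount point
OPTS="size=${SIZE},mode=0700,uid=${TARGET_UID},gid=${TARGET_GID}"

# run: print the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run mkdir -p "${CACHE_DIR}"
run mount -t tmpfs -o "${OPTS}" tmpfs "${CACHE_DIR}"

# matching /etc/fstab line to recreate the mount at every boot
printf 'tmpfs\t%s\ttmpfs\trw,%s\t0\t0\n' "${CACHE_DIR}" "${OPTS}"
```

Everything in a tmpfs is gone after a reboot, which is fine for a cache. To keep the cache in Firefox's own RAM cache instead, set browser.cache.disk.enable to false in about:config.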

Regards.

hehe I guess I was reminded of how slow a Raspberry Pi is when running FreeBSD straight off the SD card (as opposed to a NanoBSD install) when I first read your post – great to hear that it runs well, even off a USB thumbdrive.

Very nice write-up, and great catch on those hard drives – it’s the same in Norway, with the 5TB USB drives costing almost 40% less than the bare drive. I’m gonna build me one of these!

Cheers!

The only operations that take a little longer than on an SSD (which I am used to) are those heavy on small files, like the freebsd-update or portsnap fetch update commands.

Thanks, good to know that it's not only in Poland that prices are that strange 🙂

Regards.

Hi Vermaden,

I wish you a successful new year. I really like your website, I learn a lot from you. My question is: I want to build a setup like the one in this article, a backup server with a Samba share for the Kodi Raspberry Pi on my TV. Do you have a recommendation for a 3 HDD setup? Thanks, you can write me a PM via email 🙂 Big thanks

Thanks.

Email sent 🙂

Regards.

Pingback: Sensors Information on FreeBSD | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Pingback: Sensors Information on FreeBSD – Perfect Trading — Fachwissen von Profis

Pingback: ZFS on SMR Drives | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Do you do any kind of "flash optimization" when installing your rootfs on a USB drive?

Asking because my local backup is a NUC7 with a 4TB 2.5" Seagate BarraCuda "mashed inside", and a 16GB SanDisk Cruzer Fit as its boot drive, and while it has been running well for years, I wonder if I should have done something differently. It runs as my second local backup, and backs up data from my server (which is where clients back up).

Also, regarding clouds, it seems you simply use ZFS snapshots (hence the need for GELI encrypted disks), and that is a proven solution that works. For my use case I am backing up client computers, and instead rely on source encryption, so encryption of the drive doesn’t matter. The main difference is that I use Minio to provide an S3 compatible endpoint on the backup server (as well as samba for timemachine/windows versions).

The backup software has varied over time, but I tend to use software that is either built in or has a GUI, to get proper interaction with users when stuff breaks. Currently I use a mix of Arq Backup (Mac/Windows), Time Machine (Mac), and Kopia: Time Machine for local backups (local USB drive), Arq for remote backups (and the NUC).

Kopia is currently only in testing. It looks promising, and I use it for backing up configuration and other data I can recreate from scratch if need be, as well as maintain a “shadow” backup of the same data that is being backed up by my normal tools.

Hi,

To be honest, I do not – these USB drives are not heavily used, there is just the OS on them (without swap).

Nonetheless, the SanDisk Cruzer Fit drives died – all 3 of them (2 in one system, 1 in another). Since then I have moved to Lexar S47 32GB drives (32GB is the smallest size available) and they have not died, so I definitely recommend them over the SanDisk ones.

I use rsync(1) to transfer the data, but I also use ZFS snapshots to 'hold' some files/history when needed.

I have used Minio in the past and it works great on FreeBSD – even in its Distributed Mode. I like Minio, but I often make 'minor' changes, so rsync(1) makes sense for me. I also sometimes use FAT32/exFAT drives for various purposes, and those do not allow me to use ZFS snapshots with zfs send | zfs recv – that is another reason I use rsync(1): it works with everything, always, even on Winblows.
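A minimal sketch of the rsync(1) plus ZFS snapshots combination, not the exact script used on this box: copy the data with rsync(1), then take a dated snapshot so files that later change or disappear on the source are still 'held' in older snapshots. The source directory, the backup/home dataset and its default /backup/home mount point are hypothetical; the script prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: rsync(1) data into a ZFS dataset, then snapshot it so older
# versions of files are kept ('held') even after they change or vanish.
# Source, dataset and mount point are hypothetical examples.
# Dry run by default; set DRY_RUN=0 to really copy and snapshot.

DRY_RUN="${DRY_RUN:-1}"
SRC="${1:-/home/}"             # trailing slash: copy the contents of /home
DATASET="${2:-backup/home}"    # hypothetical pool/dataset
DST="/${DATASET}/"             # assumes the default mountpoint /<pool>/<dataset>
SNAP="${DATASET}@$(date +%Y-%m-%d_%H-%M)"

# run: print the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# -a preserves permissions, times, ownership and symlinks, -H hard links;
# --delete removes files gone from the source (older snapshots keep them)
run rsync -a -H --delete "${SRC}" "${DST}" || exit 1

# dated, read-only point-in-time copy of the whole dataset
run zfs snapshot "${SNAP}"
```

zfs list -t snapshot -r backup/home shows the collected history, and zfs destroy with a backup/home@<date> snapshot name drops one that is no longer needed.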

… but for some ‘defined’ backups Minio/S3 seems like a perfect fit to be honest.

And thanks for mentioning Kopia – I did not know about it, I will have to take a look at it 🙂

Regards.

Pingback: Silent Fanless Dell Wyse 3030 LT FreeBSD Server | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Pingback: AMD Based FreeBSD Desktop | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗

Pingback: Tiny UPS for Tiny NAS | 𝚟𝚎𝚛𝚖𝚊𝚍𝚎𝚗